Page fault exception:

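Also called a page-fault interrupt or page error, this is an important mechanism in operating-system virtual memory management. From the processor's point of view it is a synchronous exception.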

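A page fault is triggered when virtual memory is accessed and either no mapping has been established between the virtual address and a physical address, or the access violates the permissions of the mapping.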

The kernel must handle this exception, and the handling is transparent to the process.

How page fault handling is implemented

A page fault is a synchronous exception; when it is triggered, the CPU automatically jumps to the exception vector table.

The exception vector table is defined in arch/arm64/kernel/entry.S

Relevant source files

Exception entry: arch/arm64/kernel/entry.S

arm64 architecture-specific handling: arch/arm64/mm/fault.c

Generic code: mm/memory.c

Overall call flow:

vectors ///arch/arm64/kernel/entry.S, architecture-specific

->el1_sync

->el1_sync_handler

->el1_abort

->do_mem_abort ///arch/arm64/mm/fault.c, architecture-specific

->do_page_fault

->__do_page_fault

->handle_mm_fault ///mm/memory.c, architecture-independent

->__handle_mm_fault

->handle_pte_fault

->do_anonymous_page ///anonymous-mapping page fault

->do_fault ///file-mapping page fault

->do_swap_page ///swap page fault

->do_wp_page ///copy-on-write page fault

Source code analysis

Assembly entry point for exception handling:

/*

* Exception vectors.

*/

.pushsection ".entry.text", "ax"

.align 11

SYM_CODE_START(vectors)

kernel_ventry 1, sync_invalid // Synchronous EL1t

kernel_ventry 1, irq_invalid // IRQ EL1t

kernel_ventry 1, fiq_invalid // FIQ EL1t

kernel_ventry 1, error_invalid // Error EL1t

kernel_ventry 1, sync // Synchronous EL1h ///Linux exception vector entry; this is the synchronous exception slot, where the kernel_ventry macro expands to a branch to el1_sync

kernel_ventry 1, irq // IRQ EL1h

kernel_ventry 1, fiq // FIQ EL1h

kernel_ventry 1, error // Error EL1h

...

The el1_sync function:

SYM_CODE_START_LOCAL_NOALIGN(el1_sync)

kernel_entry 1 ///save the context

mov x0, sp ///pass sp as the argument

bl el1_sync_handler

kernel_exit 1 ///restore the context

SYM_CODE_END(el1_sync)

The el1_sync_handler function:

asmlinkage void noinstr el1_sync_handler(struct pt_regs *regs)

{

unsigned long esr = read_sysreg(esr_el1);

switch (ESR_ELx_EC(esr)) { ///read the EC field of esr_el1 to determine the exception class

case ESR_ELx_EC_DABT_CUR: ///0x25: data abort taken from the current exception level

case ESR_ELx_EC_IABT_CUR:

el1_abort(regs, esr); ///abort entry (shared by data and instruction aborts)

break;

...

}
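
The EC check above can be tried outside the kernel. The following is a minimal user-space sketch, assuming the Arm-defined layout in which EC occupies bits [31:26] of the syndrome register, WnR is bit 6 and CM is bit 8. The macro names mirror the kernel's but are redefined locally, and `esr_ec()`, `esr_is_write_abort()` and `esr_decode_demo()` are hypothetical helpers written for this illustration, not kernel functions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Local copies of the field definitions; EC is bits [31:26] of ESR_ELx. */
#define ESR_ELx_EC_SHIFT    26
#define ESR_ELx_EC_MASK     0x3FUL
#define ESR_ELx_EC_IABT_LOW 0x20   /* instruction abort from a lower EL */
#define ESR_ELx_EC_IABT_CUR 0x21   /* instruction abort from the current EL */
#define ESR_ELx_EC_DABT_LOW 0x24   /* data abort from a lower EL */
#define ESR_ELx_EC_DABT_CUR 0x25   /* data abort from the current EL */
#define ESR_ELx_WNR         (1UL << 6)  /* write-not-read */
#define ESR_ELx_CM          (1UL << 8)  /* cache maintenance operation */

/* Hypothetical helper: extract the exception class from a syndrome value. */
unsigned int esr_ec(uint64_t esr)
{
    return (unsigned int)((esr >> ESR_ELx_EC_SHIFT) & ESR_ELx_EC_MASK);
}

/* Hypothetical helper: a write abort has WnR set and is not a cache op. */
bool esr_is_write_abort(uint64_t esr)
{
    return (esr & ESR_ELx_WNR) && !(esr & ESR_ELx_CM);
}

/* Usage example: decode two sample syndrome values. */
void esr_decode_demo(void)
{
    /* 0x96000045: data abort from the current EL, caused by a write. */
    assert(esr_ec(0x96000045UL) == ESR_ELx_EC_DABT_CUR);
    assert(esr_is_write_abort(0x96000045UL));

    /* 0x82000007: instruction abort from a lower EL. */
    assert(esr_ec(0x82000007UL) == ESR_ELx_EC_IABT_LOW);
}
```

Both abort classes handled by the `switch` above (0x25 and 0x21) end up in `el1_abort()`; the sketch only shows how the class is extracted, not how the kernel dispatches on it.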

do_mem_abort()->do_page_fault()

static int __kprobes do_page_fault(unsigned long far, unsigned int esr,

struct pt_regs *regs)

{

const struct fault_info *inf;

struct mm_struct *mm = current->mm;

vm_fault_t fault;

unsigned long vm_flags;

unsigned int mm_flags = FAULT_FLAG_DEFAULT;

unsigned long addr = untagged_addr(far);

if (kprobe_page_fault(regs, esr))

return 0;

/*

* If we're in an interrupt or have no user context, we must not take

* the fault.

*/ ///check the context in which the exception occurred; if the conditions are not met, jump to no_context for error handling

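if (faulthandler_disabled() || !mm) ///are page faults disabled or are we in atomic (e.g. interrupt) context, or is there no user address space? A kernel thread's mm is always NULL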

goto no_context;

if (user_mode(regs))

mm_flags |= FAULT_FLAG_USER;

/*

* vm_flags tells us what bits we must have in vma->vm_flags

* for the fault to be benign, __do_page_fault() would check

* vma->vm_flags & vm_flags and returns an error if the

* intersection is empty

*/

if (is_el0_instruction_abort(esr)) { ///is this an instruction abort from the lower exception level (EL0)? If so, the VMA must have execute permission

/* It was exec fault */

vm_flags = VM_EXEC;

mm_flags |= FAULT_FLAG_INSTRUCTION;

} else if (is_write_abort(esr)) { ///fault caused by a write access

/* It was write fault */

vm_flags = VM_WRITE;

mm_flags |= FAULT_FLAG_WRITE;

} else {

/* It was read fault */

vm_flags = VM_READ;

/* Write implies read */

vm_flags |= VM_WRITE;

/* If EPAN is absent then exec implies read */

if (!cpus_have_const_cap(ARM64_HAS_EPAN))

vm_flags |= VM_EXEC;

}

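///if the address is a user-space (TTBR0) address and this is an EL1 permission fault, we are in a rare special case: it is reported as a fatal kernel fault unless the faulting PC has an exception-table entry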

if (is_ttbr0_addr(addr) && is_el1_permission_fault(addr, esr, regs)) {

if (is_el1_instruction_abort(esr))

die_kernel_fault("execution of user memory",

addr, esr, regs);

if (!search_exception_tables(regs->pc))

die_kernel_fault("access to user memory outside uaccess routines",

addr, esr, regs);

}

perf_sw_event(PERF_COUNT_SW_PAGE_FAULTS, 1, regs, addr);

/*

* As per x86, we may deadlock here. However, since the kernel only

* validly references user space from well defined areas of the code,

* we can bug out early if this is from code which shouldn't.

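*/ ///at this point the fault is known not to have occurred in interrupt context, in a kernel thread, or in one of the special cases above; next, handle faults caused by the address space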

if (!mmap_read_trylock(mm)) {

if (!user_mode(regs) && !search_exception_tables(regs->pc))

goto no_context;

retry:

mmap_read_lock(mm); ///may sleep while waiting for the lock to be released

} else {

/*

* The above mmap_read_trylock() might have succeeded in which

* case, we'll have missed the might_sleep() from down_read().

*/

might_sleep();

#ifdef CONFIG_DEBUG_VM

if (!user_mode(regs) && !search_exception_tables(regs->pc)) {

mmap_read_unlock(mm);

goto no_context;

}

#endif

}

///continue handling the page fault

fault = __do_page_fault(mm, addr, mm_flags, vm_flags, regs);

...

no_context:

__do_kernel_fault(addr, esr, regs); ///error handling

return 0;

}
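
The way do_page_fault() derives the required `vm_flags` from the fault type, and the intersection test that __do_page_fault() later applies, can be condensed into a small model. This is a sketch under stated assumptions: the `VM_*` values match the kernel's definitions, but `enum fault_kind`, `required_vm_flags()`, `vma_allows()` and the `has_epan` parameter are hypothetical names introduced only for illustration.

```c
#include <assert.h>
#include <stdbool.h>

#define VM_READ  0x1UL
#define VM_WRITE 0x2UL
#define VM_EXEC  0x4UL

/* Hypothetical classification of the three branches in do_page_fault(). */
enum fault_kind { FAULT_EXEC, FAULT_WRITE, FAULT_READ };

/* Which VMA permission bits make this kind of fault acceptable. */
unsigned long required_vm_flags(enum fault_kind kind, bool has_epan)
{
    unsigned long flags;

    switch (kind) {
    case FAULT_EXEC:
        return VM_EXEC;
    case FAULT_WRITE:
        return VM_WRITE;
    default:
        flags = VM_READ | VM_WRITE;   /* write implies read */
        if (!has_epan)
            flags |= VM_EXEC;         /* without EPAN, exec implies read */
        return flags;
    }
}

/* Mirrors the test "vma->vm_flags & vm_flags": any overlap is enough. */
bool vma_allows(unsigned long vma_flags, unsigned long required)
{
    return (vma_flags & required) != 0;
}

/* Usage example: a few combinations of fault kind and VMA permissions. */
void vm_flags_demo(void)
{
    /* Writing to a read-only VMA is rejected. */
    assert(!vma_allows(VM_READ, required_vm_flags(FAULT_WRITE, false)));
    /* Reading an exec-only VMA is accepted only when EPAN is absent. */
    assert(vma_allows(VM_EXEC, required_vm_flags(FAULT_READ, false)));
    assert(!vma_allows(VM_EXEC, required_vm_flags(FAULT_READ, true)));
}
```

Note that the check is an intersection test rather than a subset test: a read fault is accepted as soon as the VMA has any one of the listed bits.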

__do_page_fault()

static vm_fault_t __do_page_fault(struct mm_struct *mm, unsigned long addr,

unsigned int mm_flags, unsigned long vm_flags,

struct pt_regs *regs)

{

struct vm_area_struct *vma = find_vma(mm, addr); ///look up the VMA for the faulting address addr

if (unlikely(!vma)) ///no VMA found: addr is not in the process address space, so return VM_FAULT_BADMAP

return VM_FAULT_BADMAP;

/*

* Ok, we have a good vm_area for this memory access, so we can handle

* it.

*/

if (unlikely(vma->vm_start > addr)) { ///vm_start > addr is a special case (a stack, which grows downward): check whether the VMA can be expanded to cover addr, otherwise fail

if (!(vma->vm_flags & VM_GROWSDOWN))

return VM_FAULT_BADMAP;

if (expand_stack(vma, addr))

return VM_FAULT_BADMAP;

}

/*

* Check that the permissions on the VMA allow for the fault which

* occurred.

*/

if (!(vma->vm_flags & vm_flags)) ///check the VMA permissions; if the access is not allowed, return immediately

return VM_FAULT_BADACCESS;

return handle_mm_fault(vma, addr & PAGE_MASK, mm_flags, regs); ///the core page fault handling function

}
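
The `vm_start > addr` case follows from how find_vma() searches: it returns the first VMA whose end lies above the address, which does not guarantee that the address is inside that VMA. Below is a toy model of that lookup and of the stack-growth check, assuming a sorted array in place of the kernel's VMA data structure. `struct toy_vma`, `toy_find_vma()` and `toy_check_addr()` are hypothetical, and the line that moves `vm_start` is only a stand-in for expand_stack(), which applies further limits in the real kernel.

```c
#include <assert.h>
#include <stddef.h>

#define VM_GROWSDOWN 0x100UL

struct toy_vma {
    unsigned long vm_start;   /* inclusive */
    unsigned long vm_end;     /* exclusive */
    unsigned long vm_flags;
};

enum toy_result { TOY_OK, TOY_BADMAP };

/* First region with addr < vm_end, or NULL. Regions are sorted by address. */
struct toy_vma *toy_find_vma(struct toy_vma *v, size_t n, unsigned long addr)
{
    for (size_t i = 0; i < n; i++)
        if (addr < v[i].vm_end)
            return &v[i];
    return NULL;
}

/* Same decision order as the address checks in __do_page_fault(). */
enum toy_result toy_check_addr(struct toy_vma *v, size_t n, unsigned long addr)
{
    struct toy_vma *vma = toy_find_vma(v, n, addr);

    if (!vma)
        return TOY_BADMAP;            /* above every mapping */
    if (vma->vm_start > addr) {       /* in a hole below this region */
        if (!(vma->vm_flags & VM_GROWSDOWN))
            return TOY_BADMAP;
        vma->vm_start = addr & ~0xFFFUL;  /* stand-in for expand_stack() */
    }
    return TOY_OK;
}

/* Usage example: one ordinary region and one stack-like region. */
void find_vma_demo(void)
{
    struct toy_vma v[2] = {
        { 0x1000, 0x3000, 0 },
        { 0x8000, 0xA000, VM_GROWSDOWN },
    };

    assert(toy_check_addr(v, 2, 0x2000) == TOY_OK);      /* inside v[0] */
    assert(toy_check_addr(v, 2, 0x0500) == TOY_BADMAP);  /* hole, no growth */
    assert(toy_check_addr(v, 2, 0x7000) == TOY_OK);      /* stack grows down */
    assert(v[1].vm_start == 0x7000);
}
```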

handle_mm_fault()->__handle_mm_fault()

Entering the generic (architecture-independent) handling stage

/*

* By the time we get here, we already hold the mm semaphore

*

* The mmap_lock may have been released depending on flags and our

* return value. See filemap_fault() and __lock_page_or_retry().

*/

static vm_fault_t __handle_mm_fault(struct vm_area_struct *vma,

unsigned long address, unsigned int flags)

{

struct vm_fault vmf = { ///build the vm_fault structure that describes this fault

.vma = vma,

.address = address & PAGE_MASK,

.flags = flags,

.pgoff = linear_page_index(vma, address),

.gfp_mask = __get_fault_gfp_mask(vma),

};

unsigned int dirty = flags & FAULT_FLAG_WRITE;

struct mm_struct *mm = vma->vm_mm;

pgd_t *pgd;

p4d_t *p4d;

vm_fault_t ret;

pgd = pgd_offset(mm, address); ///locate the pgd entry

p4d = p4d_alloc(mm, pgd, address); ///locate the p4d entry (allocating if needed); here the p4d level is folded, so it is the same as the pgd

if (!p4d)

return VM_FAULT_OOM;

vmf.pud = pud_alloc(mm, p4d, address); ///locate the pud entry, allocating the table if needed

if (!vmf.pud)

return VM_FAULT_OOM;

...

vmf.pmd = pmd_alloc(mm, vmf.pud, address); ///locate the pmd entry, allocating the table if needed

if (!vmf.pmd)

return VM_FAULT_OOM;

...

return handle_pte_fault(&vmf); ///hand off to PTE-level handling

}
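
The pgd, pud, pmd and pte lookups each consume one slice of the faulting address. As a rough illustration, the sketch below computes those slices, assuming a configuration with 4 KB pages, a 48-bit virtual address and four translation levels of 9 bits each (other arm64 configurations use different widths). `table_index()` and `page_base()` are hypothetical helpers, not kernel functions.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT     12      /* assumed 4 KB pages */
#define BITS_PER_LEVEL 9       /* 512 entries per table */
#define INDEX_MASK     0x1FFULL

/* Level 0 = pgd, 1 = pud, 2 = pmd, 3 = pte (assumed 4-level layout). */
unsigned int table_index(uint64_t addr, int level)
{
    assert(level >= 0 && level <= 3);
    int shift = PAGE_SHIFT + BITS_PER_LEVEL * (3 - level);
    return (unsigned int)((addr >> shift) & INDEX_MASK);
}

/* Equivalent of "address & PAGE_MASK": drop the offset within the page. */
uint64_t page_base(uint64_t addr)
{
    return addr & ~((1ULL << PAGE_SHIFT) - 1);
}

/* Usage example: build an address from known indices and decode it again. */
void table_index_demo(void)
{
    uint64_t addr = (3ULL << 39) | (5ULL << 30) | (7ULL << 21)
                  | (9ULL << 12) | 0x123;

    assert(table_index(addr, 0) == 3);   /* pgd index */
    assert(table_index(addr, 1) == 5);   /* pud index */
    assert(table_index(addr, 2) == 7);   /* pmd index */
    assert(table_index(addr, 3) == 9);   /* pte index */
    assert(page_base(addr) == addr - 0x123);
}
```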

handle_pte_fault()

Classifies and dispatches each type of page fault

static vm_fault_t handle_pte_fault(struct vm_fault *vmf)

{

pte_t entry;

if (unlikely(pmd_none(*vmf->pmd))) {

/*

* Leave __pte_alloc() until later: because vm_ops->fault may

* want to allocate huge page, and if we expose page table

* for an instant, it will be difficult to retract from

* concurrent faults and from rmap lookups.

*/

vmf->pte = NULL;

} else {

/*

* If a huge pmd materialized under us just retry later. Use

* pmd_trans_unstable() via pmd_devmap_trans_unstable() instead

* of pmd_trans_huge() to ensure the pmd didn't become

* pmd_trans_huge under us and then back to pmd_none, as a

* result of MADV_DONTNEED running immediately after a huge pmd

* fault in a different thread of this mm, in turn leading to a

* misleading pmd_trans_huge() retval. All we have to ensure is

* that it is a regular pmd that we can walk with

* pte_offset_map() and we can do that through an atomic read

* in C, which is what pmd_trans_unstable() provides.

*/

if (pmd_devmap_trans_unstable(vmf->pmd))

return 0;

/*

* A regular pmd is established and it can't morph into a huge

* pmd from under us anymore at this point because we hold the

* mmap_lock read mode and khugepaged takes it in write mode.

* So now it's safe to run pte_offset_map().

*/

vmf->pte = pte_offset_map(vmf->pmd, vmf->address); ///locate the pte entry

vmf->orig_pte = *vmf->pte; ///read the PTE contents into vmf->orig_pte

/*

* some architectures can have larger ptes than wordsize,

* e.g.ppc44x-defconfig has CONFIG_PTE_64BIT=y and

* CONFIG_32BIT=y, so READ_ONCE cannot guarantee atomic

* accesses. The code below just needs a consistent view

* for the ifs and we later double check anyway with the

* ptl lock held. So here a barrier will do.

*/

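barrier(); ///on some processors the PTE is wider than the word size, so READ_ONCE() cannot guarantee atomicity; a barrier is added so that the checks below see a consistent copy of the PTE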

if (pte_none(vmf->orig_pte)) {

pte_unmap(vmf->pte);

vmf->pte = NULL;

}

}

///the PTE is empty

if (!vmf->pte) {

if (vma_is_anonymous(vmf->vma))

return do_anonymous_page(vmf); ///handle an anonymous mapping

else

return do_fault(vmf); ///handle a file mapping

}

///the PTE is not empty

if (!pte_present(vmf->orig_pte)) ///the PTE is set but the page is not in memory: read the page back from swap

return do_swap_page(vmf);

if (pte_protnone(vmf->orig_pte) && vma_is_accessible(vmf->vma)) ///handle pages marked for NUMA balancing

return do_numa_page(vmf);

vmf->ptl = pte_lockptr(vmf->vma->vm_mm, vmf->pmd);

spin_lock(vmf->ptl);

entry = vmf->orig_pte;

if (unlikely(!pte_same(*vmf->pte, entry))) {

update_mmu_tlb(vmf->vma, vmf->address, vmf->pte);

goto unlock;

}

if (vmf->flags & FAULT_FLAG_WRITE) { ///the FAULT_FLAG_WRITE flag is set according to ESR_ELx_WnR

if (!pte_write(entry))

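return do_wp_page(vmf); ///the VMA is writable but the PTE is read-only, which triggered the fault; typical for memory shared between parent and child processes: copy-on-write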

entry = pte_mkdirty(entry);

}

entry = pte_mkyoung(entry); ///access-flag fault: set the PTE_AF bit

if (ptep_set_access_flags(vmf->vma, vmf->address, vmf->pte, entry, ///update the PTE and the cache

vmf->flags & FAULT_FLAG_WRITE)) {

update_mmu_cache(vmf->vma, vmf->address, vmf->pte);

} else {

/* Skip spurious TLB flush for retried page fault */

if (vmf->flags & FAULT_FLAG_TRIED)

goto unlock;

/*

* This is needed only for protection faults but the arch code

* is not yet telling us if this is a protection fault or not.

* This still avoids useless tlb flushes for .text page faults

* with threads.

*/

if (vmf->flags & FAULT_FLAG_WRITE)

flush_tlb_fix_spurious_fault(vmf->vma, vmf->address);

}

unlock:

pte_unmap_unlock(vmf->pte, vmf->ptl);

return 0;

}
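
The dispatch order in handle_pte_fault() can be summarized as a pure decision function. This is a simplified model, not kernel code: it ignores locking, the racing-PTE recheck and the `vma_is_accessible()` condition, and it collapses "no PTE table" and "empty PTE" into a single `pte_none` field. `struct toy_fault`, `enum toy_handler` and `classify()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical snapshot of the state handle_pte_fault() inspects. */
struct toy_fault {
    bool pte_none;       /* no PTE table yet, or the PTE is empty */
    bool pte_present;    /* the page is resident in memory */
    bool pte_protnone;   /* marked for NUMA balancing */
    bool pte_write;      /* the PTE grants write permission */
    bool vma_anonymous;  /* the VMA has no backing file */
    bool write_fault;    /* FAULT_FLAG_WRITE is set */
};

enum toy_handler {
    H_ANONYMOUS,     /* do_anonymous_page() */
    H_FILE,          /* do_fault() */
    H_SWAP,          /* do_swap_page() */
    H_NUMA,          /* do_numa_page() */
    H_WRITE_PROTECT, /* do_wp_page() */
    H_UPDATE_FLAGS   /* only refresh the access/dirty bits */
};

/* Same order of checks as handle_pte_fault(), without side effects. */
enum toy_handler classify(const struct toy_fault *f)
{
    if (f->pte_none)
        return f->vma_anonymous ? H_ANONYMOUS : H_FILE;
    if (!f->pte_present)
        return H_SWAP;
    if (f->pte_protnone)
        return H_NUMA;
    if (f->write_fault && !f->pte_write)
        return H_WRITE_PROTECT;
    return H_UPDATE_FLAGS;
}

/* Usage example: a write to a present, read-only PTE is a COW candidate. */
void classify_demo(void)
{
    struct toy_fault cow = { .pte_present = true, .write_fault = true };
    struct toy_fault first_touch = { .pte_none = true, .vma_anonymous = true };

    assert(classify(&cow) == H_WRITE_PROTECT);
    assert(classify(&first_touch) == H_ANONYMOUS);
}
```

The order matters: the swap check must come before the write-protect check, because the permission bits of a non-present PTE carry no meaning.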

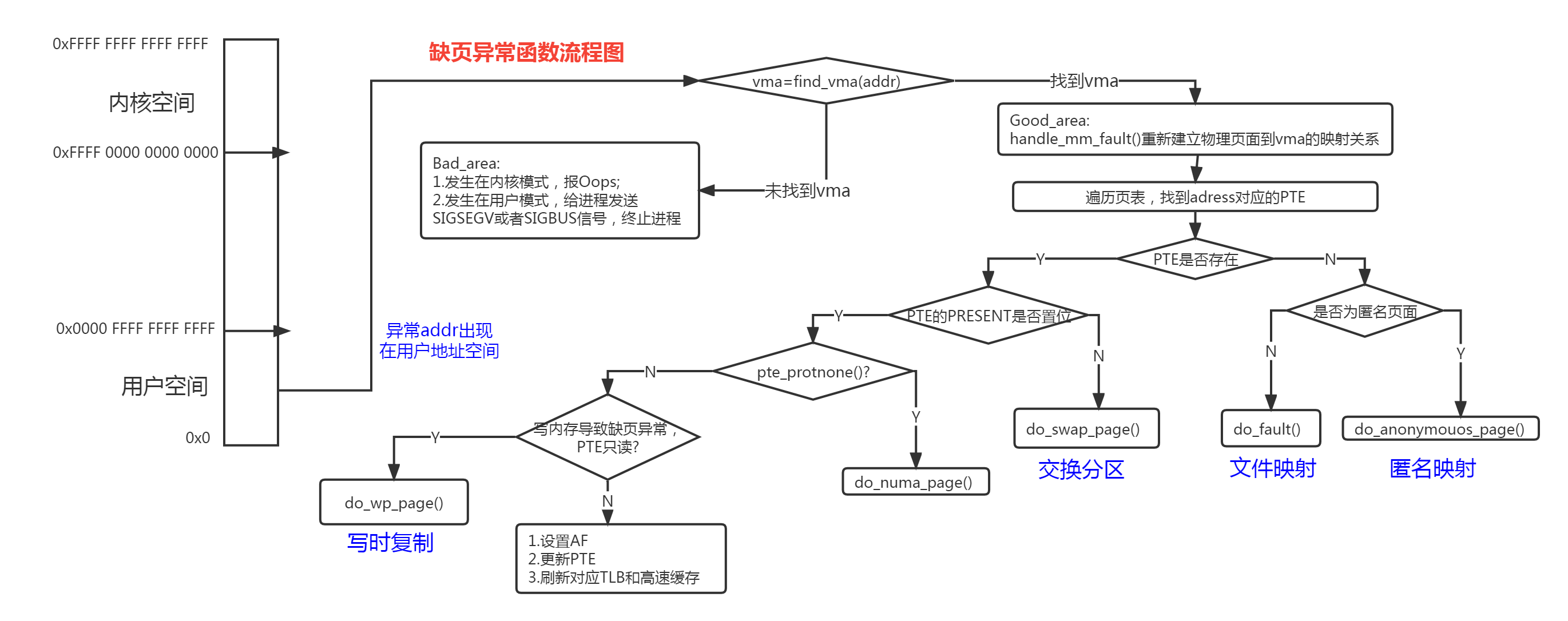

到这里,进入通用代码部分,涉及页面处理相当复杂,参考以下流程图:

缺页异常流程图:

Main types of page fault:

Anonymous-mapping page fault

File-mapping page fault

Swap page fault

Copy-on-write page fault

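Anonymous mapping: a memory mapping that is not backed by a file; physical memory is mapped into the process's virtual address space, as for the process heap and stack.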

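File mapping: a memory mapping that is backed by a file; a range of the file is mapped into the process's virtual address space and the data comes from a file on a storage device, as when a file is mapped with mmap.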

Major fault: a page fault that requires I/O to obtain the needed page, such as reading a file page from disk (VM_FAULT_MAJOR).

Minor fault: a page fault that can obtain the needed page without any I/O, such as finding the page in the page cache.
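
From user space, the two counters can be observed through `getrusage()`, which reports them as `ru_majflt` and `ru_minflt`. The following sketch maps fresh anonymous memory, touches each page once, and returns how many minor faults the process accumulated in between. `minor_faults_from_touching()` is a hypothetical helper, and the resulting number depends on the kernel configuration (for example huge-page settings), so it should be read as an observation rather than a guaranteed count.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE   /* exposes MAP_ANONYMOUS under strict -std= modes */
#endif
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/resource.h>
#include <unistd.h>

/* Returns the increase in ru_minflt while touching npages, or -1 on error. */
long minor_faults_from_touching(size_t npages)
{
    struct rusage before, after;
    long page = sysconf(_SC_PAGESIZE);

    assert(npages > 0);
    if (page <= 0)
        return -1;

    size_t len = npages * (size_t)page;
    volatile unsigned char *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    if (getrusage(RUSAGE_SELF, &before) != 0) {
        munmap((void *)p, len);
        return -1;
    }

    /* The first write to each page has no mapping yet, so it faults. */
    for (size_t i = 0; i < npages; i++)
        p[i * (size_t)page] = 1;

    int rc = getrusage(RUSAGE_SELF, &after);
    munmap((void *)p, len);
    if (rc != 0)
        return -1;

    return after.ru_minflt - before.ru_minflt;
}
```

No I/O is needed to satisfy these faults, which is why they are expected to show up in `ru_minflt` rather than `ru_majflt`.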

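Swapping: a memory-extension technique in which part of the programs or data in memory is first written out to a swap area on external storage (a swap partition or swap file) and is later loaded back into memory from that swap area when it is needed for execution.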

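Copy-on-write: when a process attempts to write to a page whose virtual memory area is writable but whose page-table entry lacks write permission, the processor raises a page fault; the kernel then allocates a new page and copies the contents of the old page into it.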
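
The visible effect of copy-on-write can be checked with `fork()`. In this sketch the child writes to a variable that it initially shares with the parent, and the parent then confirms that its own copy is unchanged. The program cannot observe do_wp_page() directly; it only shows the behavior that the copy-on-write path is responsible for preserving. `cow_parent_value_after_child_write()` is a hypothetical helper written for this illustration.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the parent's view of the variable after the child wrote to it,
 * or -1 if fork/wait failed or the child did not see its own write. */
int cow_parent_value_after_child_write(void)
{
    /* Lives in a private, writable data page shared after fork(). */
    static volatile int value = 1;
    int status;

    value = 1;
    pid_t pid = fork();
    if (pid < 0)
        return -1;

    if (pid == 0) {
        /* The write hits a read-only PTE; the child gets its own copy. */
        value = 2;
        _exit(value == 2 ? 0 : 1);
    }

    if (waitpid(pid, &status, 0) < 0)
        return -1;
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;

    /* The parent's page was never modified by the child's write. */
    return value;
}
```

Calling the function from a test program should return 1, the value the parent stored before the fork.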